WiKA: A Vision Based Sign Language Recognition from Extracted Hand Joint Features using DeepLabCut

Abstract

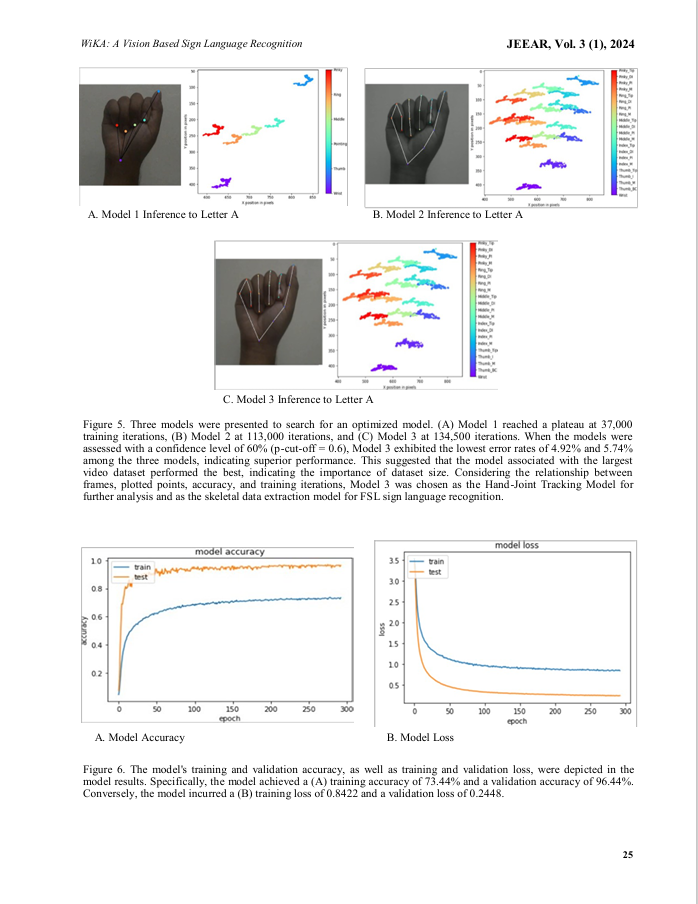

Hand gesture recognition for sign language translation, which aims to bridge the communication gap between the hearing majority and the deaf population, has seen significant breakthroughs over the years. While contact-based approaches rely on wearable devices, a vision-based solution is preferred for its convenience and because it obviates the need for complicated gear. This study presents the development of WiKA, open-source software designed to track the joints of the hands and interpret them as their corresponding sign language counterparts. DeepLabCut, a markerless pose estimation software package, was employed to develop the hand-joint tracking model, and a sequential Convolutional Neural Network was trained on the extracted hand-joint features to predict the sign language alphabet (A-Z) and numbers (1-9) based on the positioning of the joints. The developed hand-joint tracking model exhibited a 4.92% training error and a 5.74% test error at a p-cutoff of 60%. The developed sign language recognition model, in turn, achieved a 96.44% prediction accuracy, corresponding to a misclassification rate of 0.0356 (3.56%). This model can be further integrated into mobile phones for seamless conversations between the signing and non-signing populations.